Face recognition is one of the mechanical turk’s canonical fortes: reliably identifying faces from a range of perspectives is something we do without a second thought, but it proves to be excruciatingly tricky for computers. Why are our brains so good at this? How, exactly, do they work? How do computational strategies differ from biological ones?

Where do they overlap?

Behold: Chapter 15 of the Handbook of Face Recognition explores these questions in some detail, describing theories of how the human brain identifies and understands faces. A few highlights from the chapter follow:

First, a few semantic nuances:

Recognition: Have I seen this face before?

Identification: Whose face is it?

Stimulus factors: Facial features

Photometric factors: Amount of light, viewing angle

The Thatcher Illusion: Processing is biased towards typical views

Categorization

Beyond the basic physical categorizations (race, gender, age), we also associate emotional and personality characteristics with the appearance of a face. The use of these snap judgments was found to improve identification rates over those achieved with physical characteristics alone.

Prototype Theory of Face Recognition

Unusual faces were found to be more easily identified than common ones. The ability to recognize atypical faces implies a prototypical face against which others are compared. Recognition may therefore involve positioning a particular face relative to the average, prototypical face: the greater the distance from that average, the higher the accuracy. (The PCA / eigenface model implements this idea.)
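To make the "distance from the prototype" idea concrete, here is a minimal sketch. It assumes each face has already been reduced to a short list of numbers (in an eigenface system these would be PCA coefficients; here they are made-up values), and the names `mean_face` and `distinctiveness` are illustrative, not taken from the Handbook.

```python
import math

def mean_face(faces):
    """Average each feature across all faces to get the prototype."""
    n = len(faces)
    return [sum(column) / n for column in zip(*faces)]

def distinctiveness(face, prototype):
    """Euclidean distance of one face from the prototype."""
    return math.dist(face, prototype)

# Hypothetical 3-feature face vectors (assumed values, not real data).
faces = {
    "a": [1.0, 1.0, 1.0],
    "b": [1.2, 0.9, 1.1],
    "c": [0.8, 1.1, 0.9],
    "d": [3.0, 0.2, 2.5],  # deliberately unusual
}

prototype = mean_face(list(faces.values()))
scores = {name: distinctiveness(vec, prototype) for name, vec in faces.items()}

# The face farthest from the prototype is the most distinctive one.
most_distinctive = max(scores, key=scores.get)
print(most_distinctive, round(scores[most_distinctive], 3))
```

Under the prototype theory, face `d` is the one we would expect to be easiest to recognize, because it sits farthest from the average.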

This also has implications for the other-race effect, which describes the difficulty humans have with identifying individuals of races to which they are not regularly exposed. However, the PCA approach to face recognition actually does well with the minority faces, since they exist outside the cluster of most faces and therefore have fewer neighbors and lower odds of misidentification.
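The "fewer neighbors" argument can be illustrated with a toy count. This is only a sketch under assumed data: the two-dimensional points, the radius, and the helper `neighbors_within` are all hypothetical, standing in for faces projected into a PCA space.

```python
import math

def neighbors_within(target, others, radius):
    """Count how many other faces lie within `radius` of the target."""
    return sum(1 for other in others if math.dist(target, other) <= radius)

# A tight cluster of "majority" faces plus one face far outside it.
cluster = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1]]
outlier = [2.0, 2.0]
all_faces = cluster + [outlier]

def count_for(face, radius=0.5):
    """Neighbors of `face` among all the other faces in the set."""
    others = [f for f in all_faces if f is not face]
    return neighbors_within(face, others, radius)

print(count_for(cluster[0]))  # a clustered face has several close neighbors
print(count_for(outlier))     # the outlier has none within the radius
```

A face with no close neighbors has nothing nearby to be confused with, which is the intuition behind the lower odds of misidentification.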

Caricature

The prototype theory suggests that amplification of facial features should improve recognition and identification even further.

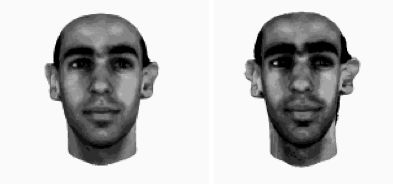

Here’s an example, the original face is at left, and a caricature based on amplifying the face’s distance from the average is at right:

This also opens the possibility of an anti-caricature, or anti-face, which involves moving in the opposite direction, back past the average, and amplifying the result.

The original face is at left, the anti-face is at right:

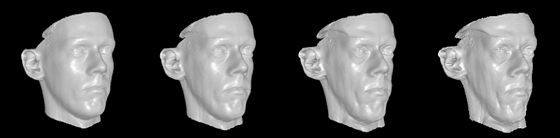
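Both the caricature and the anti-face can be read as the same vector operation with a different scale factor. The sketch below assumes faces are plain feature vectors and uses a hypothetical helper, `exaggerate`; the factor of 1.5 is an arbitrary choice for illustration, not a value from the chapter.

```python
def exaggerate(face, prototype, k):
    """Scale a face's offset from the prototype by k.

    k > 1 pushes the face farther from the average (caricature);
    k < 0 moves it back past the average to the other side (anti-face);
    k = 0 gives the prototype itself.
    """
    return [p + k * (f - p) for f, p in zip(face, prototype)]

# Hypothetical feature vectors (assumed values).
prototype = [1.0, 1.0, 1.0]
face = [1.2, 0.9, 1.0]

caricature = exaggerate(face, prototype, 1.5)   # offsets amplified
anti_face = exaggerate(face, prototype, -1.5)   # offsets reversed and amplified

print(caricature)
print(anti_face)
```

Features where the face already matches the average (the third value here) are left untouched by both operations, so only what makes the face distinctive gets amplified or reversed.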

Interestingly, caricaturization also seems to age the subject. (This supports the notion that age brings distinction.)

Prosopagnosia

Prosopagnosia is a condition affecting some stroke and brain injury victims that destroys the ability to identify faces while leaving other visual recognition tasks intact. This suggests that face identification and recognition are concentrated in one area of the brain, which points to a modular approach to processing.

(Images: Handbook of Face Recognition)